WASHINGTON – Despite deploying similar algorithmic tools to advertise and accelerate growth among massive numbers of users, including millions of teenagers, social media sites like Snapchat and TikTok have largely escaped public and congressional scrutiny as of late.

That changed Tuesday, when representatives from TikTok, Snapchat and YouTube, testifying at a hearing of the Senate Subcommittee on Consumer Protection, Product Safety, and Data Security, rejected claims that their algorithms have been misused to harm kids, amplify addiction and invade user privacy.

And while Facebook, marred by weeks of damning national media coverage, did not have a representative present at Tuesday’s hearing, the company’s presence was felt early and often.

“Being different from Facebook is not a defense. That bar is in the gutter,” said Chairman Richard Blumenthal, D-Conn. “It’s not a defense to say that you are different. What we want is not a race to the bottom, but really a race to the top.”

Tuesday marked the third time in a month Blumenthal convened a hearing dedicated to protecting kids online. It was the first time that lawmakers heard from Snapchat and TikTok.

Representatives from all three companies promoted and defended a variety of new and existing features meant to protect young users.

“Between April and June of this year, we removed nearly 1.8 million videos for violations of our child safety policies,” said Leslie Miller, Vice President of Government Affairs and Public Policy at YouTube.

Snap Inc. announced it was developing new tools designed to give parents more control over how their teens use the app, but did not give a timeline for when those tools would be released.

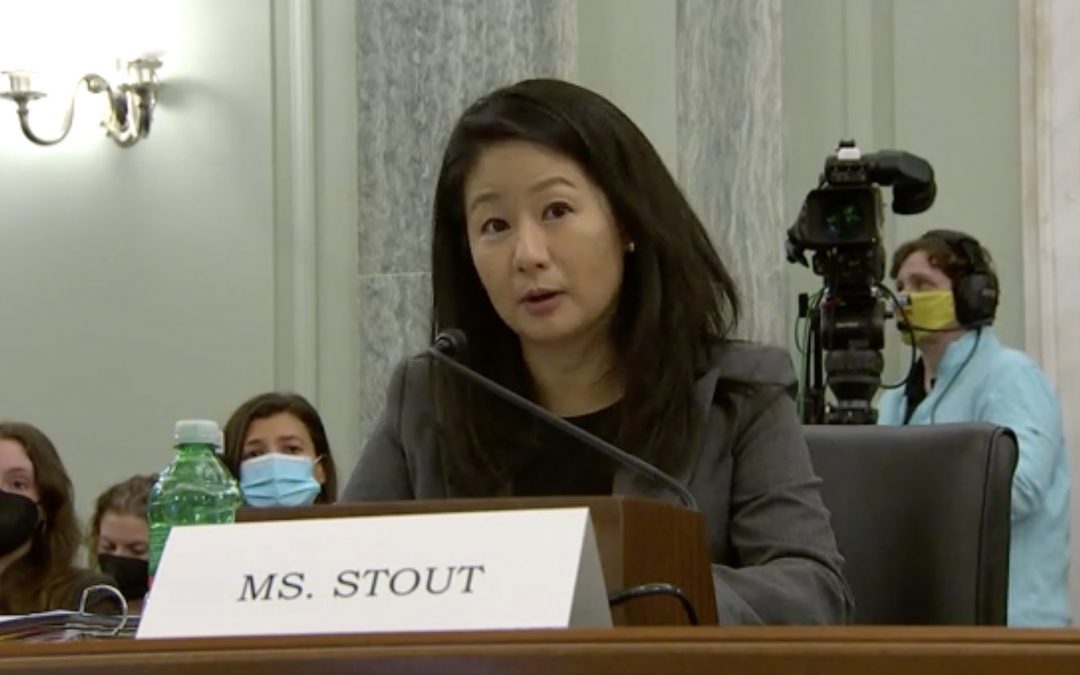

“Protecting the wellbeing of our community is something we approach with both humility and determination,” said Jennifer Stout, Vice President of Global Public Policy at Snapchat.

TikTok touted safety restrictions already in place on teen accounts, including bars on teen users having public accounts, hosting live streams and sending direct messages on the platform.

“These are perhaps underappreciated product choices that go a long way to protect teens,” said Michael Beckerman, Vice President and Head of Public Policy at TikTok Americas. “We made these decisions, which are counter to industry norms or our own short term growth interests, because we’re committed to do what’s right.”

Nevertheless, lawmakers were skeptical, suggesting the companies had repeatedly prioritized rapid and consistent growth over the safety of their users, particularly teens.

“I don’t think parents are going to stand by while our kids and our democracy become collateral damage to a profit game,” said Sen. Amy Klobuchar, D-Minn.

Lawmakers from both sides of the aisle agreed that updated legislation is needed to keep social media sites in check.

When pressed to commit their companies’ support for updated legislation on internet regulation and Section 230 of the Communications Decency Act of 1996, witnesses from all three companies agreed an update is appropriate, though each was hesitant to pledge full support for any specific bill.

Sen. Blumenthal said the last few weeks represent a “moment of reckoning” for big tech companies, and that support for strict legislation was bipartisan.

“There has been a definite and deafening drumbeat of continuing disclosures about Facebook that have deepened America’s concerns and outrage, and have led to increasing calls for accountability,” Blumenthal said. “And there will be accountability. This time is different.”