WASHINGTON –– Cyber policy experts, tech auditors, and congressional lawmakers from both sides of the aisle pushed to reform laws governing online platforms, such as Section 230 of the Communications Decency Act, and called for social media platforms to be transparent about their data at a hearing held on Thursday.

“Americans deserve answers on how the platforms themselves are designed to funnel specific content to certain users and how that might distort users’ views and shape their behavior, both online and offline,” said Sen. Gary Peters, D-Mich. “It’s simply not enough for companies to pledge that they will get tougher on harmful content. These pledges have gone largely unfulfilled for several years now.”

This hearing was the second held by the Senate this week in an effort to understand the algorithms that spread harmful content online. On Tuesday, senators heard testimony from executives at TikTok, Snapchat and YouTube. Each offered ways to better monitor illicit content, but lawmakers remained skeptical of their commitments.

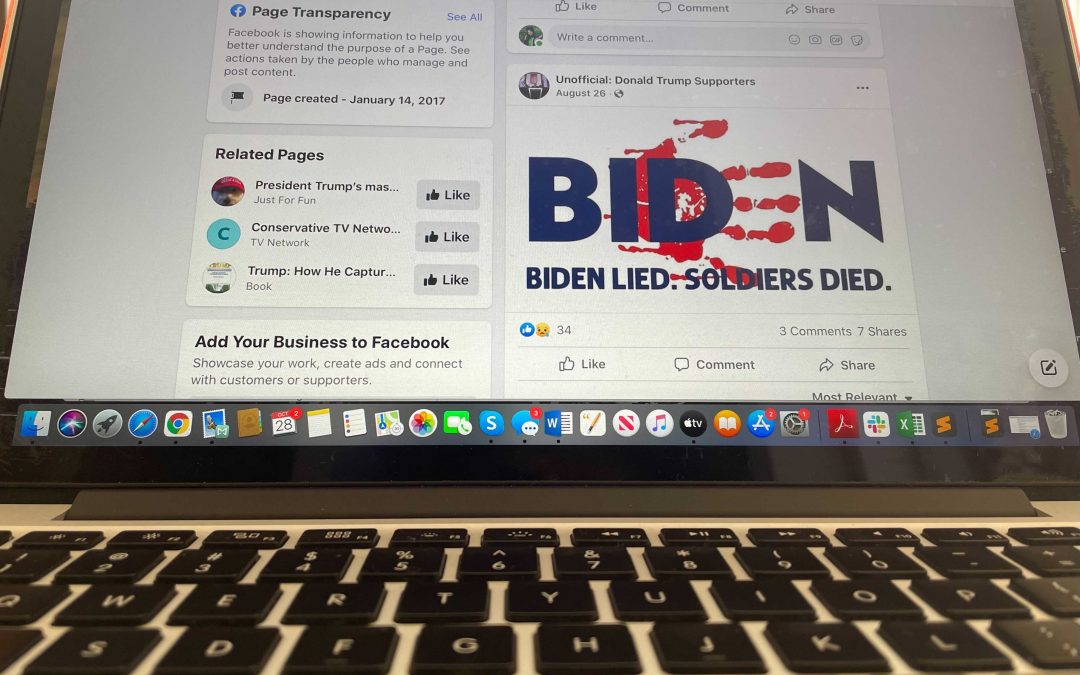

Calls for regulation of social media platforms have intensified in the aftermath of the Jan. 6 insurrection at the Capitol. Facebook has come under fire for its role in helping to mobilize and amplify violent protests through its platform.

Internal research documents, now known as the “Facebook Papers,” leaked by whistleblower Frances Haugen, revealed how company executives chose to let fake news and misinformation spread across the platform to keep more users engaged and logged on. The documents also showed that Facebook executives were aware of the negative effects its sister platform, Instagram, had on the mental health of teenage girls, and of how drug cartels and sex traffickers openly used the company’s platforms to conduct illegal business.

In her testimony before Congress earlier this month, Haugen said Facebook chose growth over safety and neglected evidence of the harm its algorithms generated. She called for more robust regulation and oversight of Big Tech companies and their policies.

Sen. Robert Portman, R-Ohio, echoed this need for cyber reform. He said this “exploitation of social media for nefarious purposes” is not limited to terrorism, but is also carried out by foreign adversaries and a host of other threat actors. He pointed to how China and Russia used these platforms to conduct influence campaigns targeting Americans, including interference in U.S. elections. Portman also said that congressional testimony from 2016 revealed that ISIS had used social media platforms to advance its terrorist goals, accelerate its recruitment efforts and mobilize violence in Iraq and Syria.

“This raises important questions about whether or not it is time to revisit the immunity provided by Section 230,” said the senator.

Cyber policy expert Karen Kornbluh testifies before lawmakers at a hearing held Thursday. (Senate Committee on Homeland Security and Governmental Affairs)

Section 230 of the 1996 Communications Decency Act shields websites and online platforms from legal liability for the content and activities of their users. This has allowed social media giants to escape accountability for violence and harmful content organized and spread on their platforms. Recent events have prompted calls to re-examine the law and whether it should continue to grant such broad immunity.

“These billion- and trillion-dollar companies have the resources to improve systems, hire additional staff and provide real transparency. Yet, they claim it’s too burdensome,” said David Sifry, a tech expert from the Anti-Defamation League. “How many lives will be lost before Big Tech puts people over profit?”

Karen Kornbluh, a digital innovation expert, said Facebook’s internal documents revealed that its moderation process catches only a small percentage of harmful content: just 3 to 5% of hate speech and only 0.6% of content that depicts or incites violence.

Kornbluh suggested that as Congress revises existing legislation and seeks to pass new cyber policy legislation, it should consider introducing a “digital code of conduct” that could help tackle algorithmic radicalization while protecting freedom of expression. She also said platforms should commit to transparent third-party audits, much as data from an aircraft’s black box is used to improve aviation regulations and safety protocols.

“We shouldn’t need a whistleblower to access data,” Kornbluh said.