WASHINGTON – From text suggestions while writing emails to playing games like chess or solitaire against a computer, artificial intelligence, or AI, has been a part of the daily lives of Americans for years. With the rapid acceleration of generative AI technology in recent years, though, AI is appearing in new ways and spaces – including the U.S. workforce.

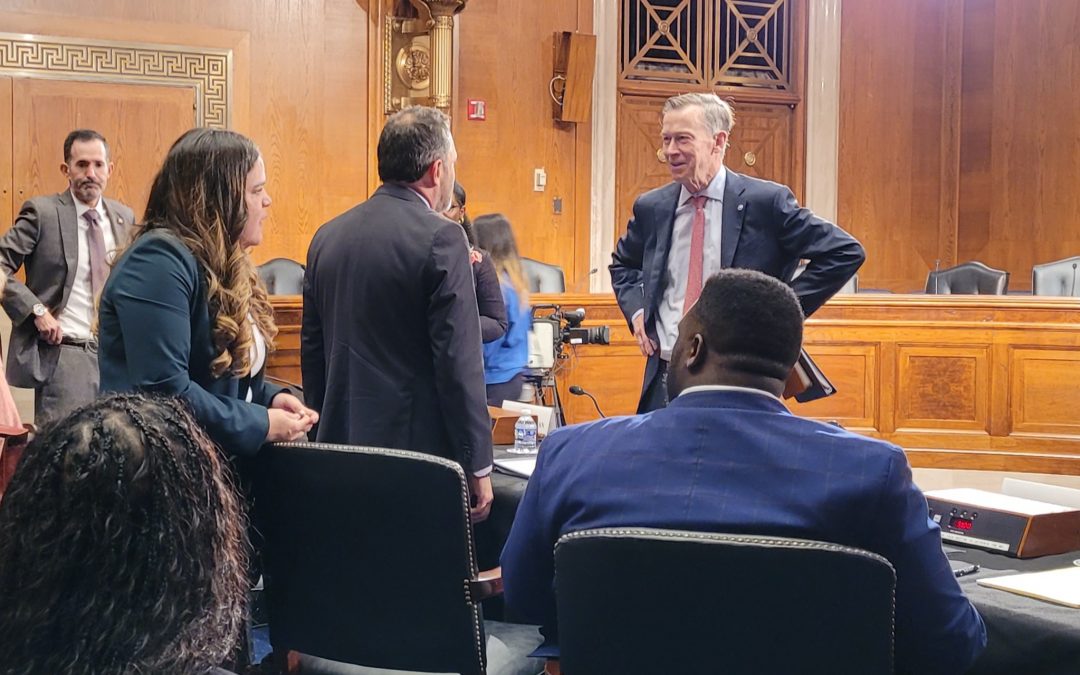

“This technology has the potential to positively alter the way that literally all of us work,” said Sen. John Hickenlooper, D-Colo., chairman of the Senate Subcommittee on Employment and Workplace Safety. The subcommittee held a hearing Tuesday morning, consulting with tech and AI experts on AI and the future of work.

The use of generative artificial intelligence has already begun to appear in different industries across the U.S. workforce and with it have come questions – both in terms of how to regulate the technology and what the impact will be on U.S. jobs.

“I think we have an imperative to do it right, to make sure we’re not making missteps, as we move so rapidly in this direction,” Hickenlooper continued. “I think working together and including workers in that conversation is essential.”

While industries with a high likelihood of being impacted by AI are hopeful about the capabilities of the technology, a Pew Research study found that approximately 64% of Americans believe AI has the potential to cause significant harm to workers.

“It brings us to an interesting crossroads, with something that looks like it can do so much, that can be so beneficial, but also looks like the malfeasance that can come from it, forewarned by the people who know the most about it, should give you pause,” said Sen. Mike Braun, R-Ind., ranking member of the subcommittee.

Bradford Newman, a litigator and advisor specializing in AI, said the potential “nefarious” uses of AI in the U.S. include creating false images and voice imitations, interfering with elections, and promoting cyberattacks.

Tuesday’s hearing comes just one day after President Joe Biden issued an Executive Order establishing sweeping standards for artificial intelligence. Included in Biden’s order are AI safety and security standards, privacy protections, and calls to develop ways to best support workers while maximizing the potential benefits of AI in the workforce.

Senators asked whether integrating AI into the workforce would displace jobs if AI were used for tasks previously done by human workers. Representatives from companies like Accenture and Workday emphasized that AI is a supplementary tool, while also pressing the need to make workforce training more accessible for tech and newly created AI-related jobs.

“Jobs will not be done either by humans or robots, but by humans enhanced by AI,” said Mary Kate Morley Ryan, a representative of Accenture, a global professional services company that not only uses AI but also helps other businesses do the same.

Transforming the workforce will require assessing the impact AI will have on existing jobs, determining what skills would be needed for the future workforce, and providing that skill training, Morley Ryan said. Additionally, she expressed a need for government and companies to come together on a “responsible” framework of regulations for AI.

As of Sept. 10, nearly half the states in the U.S. had either proposed or enacted AI legislation, according to a legislation tracker created by a global law firm that also maps regulatory efforts across the European Union. However, Newman, who serves as co-chair of the AI subcommittee for the American Bar Association, cautioned against leaving regulation up to individual states.

“Companies that develop AI and deploy AI technology in the workforce, and the workforce itself, deserve a rational solution that delivers clarity and consistency on a national level,” he said.

Newman proposed that federal legislation, rather than taking a generalized regulatory approach, should instead focus on prohibited-use cases and on providing “guardrails” for the technology. He warned that overly “burdensome” regulations would leave only larger companies able to use AI to its full potential, while alienating smaller businesses that lack the resources and finances to comply.

“A rational risk-based approach would ensure that all AI developers have the resources to comply and participate in the opportunities presented by AI workforce ecosystem,” said Newman. “The developers of this technology want to do the right thing and are eager to work with a bipartisan group of federal legislators to get this right.”